Working with Amazon S3 (Simple Storage Service)

January 17, 2015

Amazon S3 (Simple Storage Service) is an internet-based ("in the cloud") storage service. Amazon runs it in its data centers worldwide, and it provides a highly available, secure, and scalable storage solution. Objects (files) stored in S3 can be saved and retrieved through a REST interface or through the APIs that Amazon provides.

Objects stored in S3 can serve web and mobile applications and websites, feed big data analytics, or be kept for backup and archiving. S3 is cost-effective because storage space is available on demand and you pay only for what you use. Prices for storage, archiving, retrieval, and data transfer are listed on the Amazon S3 pricing page.

In S3, objects are stored in logical containers called buckets. Buckets belong to your AWS account, and a bucket can hold a virtually unlimited number of objects. Each object stored in a bucket can be accessed through a unique URL. Buckets can be addressed with two URL styles: virtual-hosted-style and path-style. In a virtual-hosted-style URL the bucket name is part of the domain name; in a path-style URL it is part of the path instead. Examples of both are shown below.

Virtual-hosted-style URL example: http://bucketname.s3.amazonaws.com

Path-style URL example: http://s3.amazonaws.com/bucketname
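The sketch below builds both URL forms for a bucket and an optional object key. It is written in Python with only the standard library, purely for illustration; the post itself uses the .NET SDK.

A few assumptions apply:

- The helper names `virtual_hosted_url` and `path_style_url` are hypothetical.
- The global `s3.amazonaws.com` endpoint and the plain `http` scheme are taken from the examples above.
- The key is percent-encoded with `urllib.parse.quote`.

```python
from urllib.parse import quote

# Endpoint and scheme assumed from the two example URLs in the post.
ENDPOINT = "s3.amazonaws.com"


def virtual_hosted_url(bucket: str, key: str = "") -> str:
    """Virtual-hosted style: the bucket name is part of the host name."""
    url = f"http://{bucket}.{ENDPOINT}"
    return f"{url}/{quote(key)}" if key else url


def path_style_url(bucket: str, key: str = "") -> str:
    """Path style: the bucket name is the first segment of the path."""
    url = f"http://{ENDPOINT}/{bucket}"
    return f"{url}/{quote(key)}" if key else url


print(virtual_hosted_url("bucketname"))  # prints http://bucketname.s3.amazonaws.com
print(path_style_url("bucketname", "Test Object Key"))
```

Virtual-hosted-style addressing requires a DNS-compatible bucket name, because the name becomes part of a host name.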

Access to buckets and objects is regulated by access control lists (ACLs). Each grant in an ACL gives a permission, such as READ or WRITE, on a bucket or object to an AWS account or to a predefined group.
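How a list of grants answers "may this grantee do X?" can be sketched as a small offline model. This is a simplified toy, not how S3 actually evaluates requests: it ignores bucket policies, IAM policies, and the other predefined groups. The `Grant` class and the `is_allowed` helper are hypothetical. The five permission names and the AllUsers group URI are the ones S3 ACLs use, and FULL_CONTROL implies the other four permissions.

```python
from dataclasses import dataclass

# The five permissions an S3 ACL grant can carry.
PERMISSIONS = {"READ", "WRITE", "READ_ACP", "WRITE_ACP", "FULL_CONTROL"}

# URI of S3's predefined "AllUsers" (anyone) group.
ALL_USERS = "http://acs.amazonaws.com/groups/global/AllUsers"


@dataclass(frozen=True)
class Grant:
    grantee: str     # canonical user ID or group URI (hypothetical values below)
    permission: str  # one of PERMISSIONS


def is_allowed(acl: list[Grant], grantee: str, permission: str) -> bool:
    """Toy check: does any grant give `grantee` the requested permission?

    FULL_CONTROL implies every other permission, and a grant to the
    AllUsers group applies to every grantee.
    """
    if permission not in PERMISSIONS:
        raise ValueError(f"unknown permission: {permission}")
    for grant in acl:
        if grant.grantee not in (grantee, ALL_USERS):
            continue
        if grant.permission in (permission, "FULL_CONTROL"):
            return True
    return False


acl = [Grant("owner-canonical-id", "FULL_CONTROL"), Grant(ALL_USERS, "READ")]
print(is_allowed(acl, "someone-else", "READ"))   # prints True
print(is_allowed(acl, "someone-else", "WRITE"))  # prints False
```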

In this post we will perform basic operations on buckets and objects with the .NET API (the AWS SDK for .NET). The same operations can be done from the AWS Management Console or through the REST API. The REST API is the lower-level interface: the SDK wraps the REST calls and handles details such as request signing and response parsing, while calling the REST API directly gives more control over how data is transferred. In later posts I will work with the REST API directly.

With the .NET SDK, buckets can be created through simple classes and methods in the Amazon.S3 namespace. The namespace becomes available once the project references the AWSSDK assembly. Projects created from the AWS project templates include the assembly reference and namespaces by default.

1- Create a Bucket

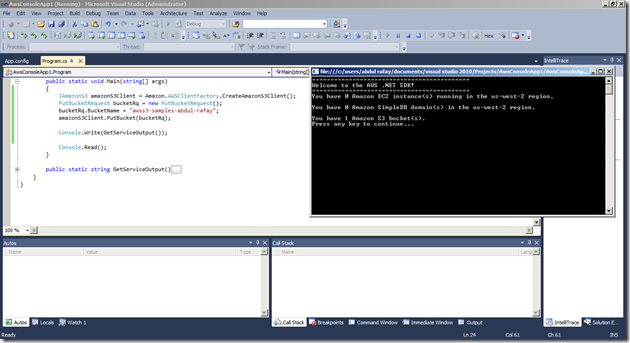

In the code shown, a bucket is created in S3 with only a few lines. The first step is to create an S3 client object (amazonS3Client) from AWSClientFactory. This generic client can submit any type of S3 request.

Each call on the client takes a request object whose type corresponds to the operation. To create a bucket, a PutBucketRequest object is created, the bucket name is set on it, and it is passed to the client.

After the call runs, the bucket named “awss3-samples-abdul-rafay” is created, as the following output shows.
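The .NET call above is a wrapper around an HTTP request. The sketch below builds, but does not send, a request that roughly corresponds to it, using Python's standard library for illustration.

It rests on these assumptions:

- The bucket lives in us-east-1, where no request body is needed.
- The request is left unsigned, so S3 would reject it until an AWS Signature Version 4 `Authorization` header is added.
- The helper name `create_bucket_request` is hypothetical.

```python
import urllib.request


def create_bucket_request(bucket: str) -> urllib.request.Request:
    """Build (but do not send) an unsigned "PUT Bucket" request.

    Assumes us-east-1, where no CreateBucketConfiguration body is needed.
    """
    url = f"http://{bucket}.s3.amazonaws.com/"
    return urllib.request.Request(url, method="PUT")


req = create_bucket_request("awss3-samples-abdul-rafay")
print(req.get_method(), req.full_url)
```

Outside us-east-1, S3 also expects a `CreateBucketConfiguration` XML body that names the region; the SDK builds that body for you.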

2- Write an object in the bucket

Following the same pattern, a PutObjectRequest object is created and passed to the S3 client.

The BucketName, Key, and ContentBody properties are set on the PutObjectRequest object, as shown below.

Several other properties, such as metadata, can also be set, depending on the type and nature of the object being stored.

The following output is produced after the object with the key “Test Object Key” is created in the bucket.

The new object can also be seen by browsing the bucket in AWS Explorer in Visual Studio 2010.
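The sketch below builds, but does not send, an unsigned "PUT Object" request, to show where the PutObjectRequest properties end up on the wire.

- BucketName and Key form the URL.
- ContentBody becomes the request body.
- User-defined metadata travels as `x-amz-meta-*` headers.

The following are assumptions made for illustration:

- The text content type.
- The `author` metadata entry.
- The body `"Hello S3"`.
- The helper name `put_object_request`.

```python
import urllib.request
from typing import Optional
from urllib.parse import quote


def put_object_request(bucket: str, key: str, body: str,
                       metadata: Optional[dict[str, str]] = None) -> urllib.request.Request:
    """Build (but do not send) an unsigned PUT Object request.

    `body` plays the role of ContentBody; user metadata becomes
    x-amz-meta-* headers. No Authorization header is added.
    """
    url = f"http://{bucket}.s3.amazonaws.com/{quote(key)}"
    req = urllib.request.Request(url, data=body.encode("utf-8"), method="PUT")
    # Assumed content type for a plain-text body.
    req.add_header("Content-Type", "text/plain; charset=utf-8")
    for name, value in (metadata or {}).items():
        req.add_header(f"x-amz-meta-{name}", value)
    return req


req = put_object_request("awss3-samples-abdul-rafay", "Test Object Key",
                         "Hello S3", {"author": "demo"})
print(req.get_method(), req.full_url)
# urllib stores header names via str.capitalize(); HTTP header names
# are case-insensitive, so the request is unaffected.
print(req.header_items())
```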

3- Retrieve an object from S3 and write its contents to a file

To retrieve the object stored earlier, a GetObjectRequest with the bucket name and key is passed to the S3 client. A key is unique within a bucket: writing another object with the same key overwrites the existing object (unless versioning is enabled on the bucket, in which case earlier versions are kept). It is up to the application to ensure key uniqueness and to coordinate concurrent reads and writes.

The output of the code is shown below: the object is read and saved to a file on the desktop with the same name as its key.
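The retrieval step has two parts: an unsigned "GET Object" request, and writing the returned bytes to a file named after the key. The sketch below shows both in standard-library Python, with these assumptions:

- No network call is made, so a stand-in body is used.
- A temporary directory replaces the desktop.
- The helper names `get_object_request` and `save_object` are hypothetical.

```python
import os
import tempfile
import urllib.request
from urllib.parse import quote


def get_object_request(bucket: str, key: str) -> urllib.request.Request:
    """Build (but do not send) an unsigned GET Object request."""
    url = f"http://{bucket}.s3.amazonaws.com/{quote(key)}"
    return urllib.request.Request(url)  # urllib defaults to GET when there is no body


def save_object(body: bytes, key: str, directory: str) -> str:
    """Write an object's bytes to `directory`, using the key as the file name."""
    # Keys may contain '/', which cannot appear in a file name; replace it.
    path = os.path.join(directory, key.replace("/", "_"))
    with open(path, "wb") as f:
        f.write(body)
    return path


req = get_object_request("awss3-samples-abdul-rafay", "Test Object Key")
print(req.get_method(), req.full_url)
with tempfile.TemporaryDirectory() as tmp:
    saved = save_object(b"Hello S3", "Test Object Key", tmp)
    print(os.path.basename(saved))  # prints Test Object Key
```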

4- Listing objects in the bucket

A ListObjectsRequest object with BucketName set is passed to the S3 client, which returns the objects in that bucket.
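Listing a bucket over REST is a GET on the bucket. S3 answers with a `ListBucketResult` XML document, and the .NET SDK parses that document into its response object. The sketch below builds the unsigned request and extracts key/size pairs with `xml.etree.ElementTree`.

The following parts are assumptions or illustrations:

- The sample XML is hand-written and trimmed for illustration; it is not captured output.
- The optional `prefix` parameter is shown only as an example.
- The helper names `list_objects_request` and `parse_keys` are hypothetical.

```python
import urllib.request
import xml.etree.ElementTree as ET
from urllib.parse import urlencode

# XML namespace of S3 REST API response documents.
S3_NS = {"s3": "http://s3.amazonaws.com/doc/2006-03-01/"}


def list_objects_request(bucket: str, prefix: str = "") -> urllib.request.Request:
    """Build (but do not send) an unsigned "GET Bucket" (List Objects) request."""
    query = ("?" + urlencode({"prefix": prefix})) if prefix else ""
    return urllib.request.Request(f"http://{bucket}.s3.amazonaws.com/{query}")


def parse_keys(xml_text: str) -> list[tuple[str, int]]:
    """Return (key, size) pairs from a ListBucketResult document."""
    root = ET.fromstring(xml_text)
    return [
        (item.findtext("s3:Key", namespaces=S3_NS),
         int(item.findtext("s3:Size", namespaces=S3_NS)))
        for item in root.findall("s3:Contents", S3_NS)
    ]


# Hand-written, trimmed response for illustration only.
SAMPLE = """<ListBucketResult xmlns="http://s3.amazonaws.com/doc/2006-03-01/">
  <Name>awss3-samples-abdul-rafay</Name>
  <IsTruncated>false</IsTruncated>
  <Contents><Key>Test Object Key</Key><Size>8</Size></Contents>
</ListBucketResult>"""

print(list_objects_request("awss3-samples-abdul-rafay").full_url)
print(parse_keys(SAMPLE))  # prints [('Test Object Key', 8)]
```

A single response returns at most 1,000 keys. When `IsTruncated` is `true`, further pages must be requested.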

5- Deleting an object

A DeleteObjectRequest object with BucketName and Key set is passed to the S3 client.

The object is deleted from the bucket.
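At the REST level, the delete is a single DELETE on the object's URL. The sketch below builds that request, unsigned and not sent, with standard-library Python. The helper name `delete_object_request` is hypothetical.

```python
import urllib.request
from urllib.parse import quote


def delete_object_request(bucket: str, key: str) -> urllib.request.Request:
    """Build (but do not send) an unsigned DELETE Object request."""
    url = f"http://{bucket}.s3.amazonaws.com/{quote(key)}"
    return urllib.request.Request(url, method="DELETE")


req = delete_object_request("awss3-samples-abdul-rafay", "Test Object Key")
print(req.get_method(), req.full_url)
```

On success, S3 answers a delete with `204 No Content`.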